How does YouTube’s content moderation policy affect free speech? What are the main criticisms of YouTube’s code of conduct? Why do some creators feel unfairly targeted by YouTube’s policies? How can YouTube balance advertiser interests with creator freedom? Is there evidence of ideological bias in YouTube’s moderation practices? What reforms could improve YouTube’s content policies? How do YouTube’s rules compare to other social media platforms?

The Evolution of YouTube’s Content Moderation Policies

YouTube’s journey from a platform with no rules to one with a comprehensive code of conduct has been marked by controversy and debate. As the site grew in popularity, it faced increasing pressure to combat disturbing and illegal content. This led to the introduction of formal Community Guidelines in 2012, which have since undergone multiple revisions.

These guidelines now cover a wide range of issues, including:

- Hate speech

- Harassment

- Dangerous content

- Nudity

- Spam

- Threats

- Cyberbullying

While these rules may seem reasonable on paper, their implementation has often been problematic. YouTube relies on a combination of machine learning algorithms and human reviewers to identify rule-breaking content. However, this system has faced criticism for its inconsistency and lack of transparency.

The Challenges of Large-Scale Content Moderation

How does YouTube manage to moderate content on such a massive scale? The platform employs thousands of human reviewers who work alongside AI algorithms to flag and remove violating content. However, this approach has several limitations:

- Human reviewers often receive inadequate training and are expected to make complex decisions about speech issues in a matter of seconds.

- AI algorithms, while capable of processing vast amounts of data, can struggle with nuance and context.

- The sheer volume of content uploaded to YouTube (over 500 hours every minute) makes comprehensive moderation a Herculean task.

These challenges have led to numerous instances of incorrect flagging or demonetization, frustrating content creators and viewers alike.

The Influence of Advertisers on YouTube’s Policies

One of the primary drivers behind YouTube’s strict content policies is the platform’s reliance on advertising revenue. Brands are understandably cautious about where their ads appear, and YouTube has implemented several measures to appease advertisers:

- Keyword filtering that automatically demonetizes videos mentioning sensitive topics

- Ad placement controls that give advertisers extensive say over where their ads appear

- Stringent content guidelines that err on the side of caution

This advertiser-driven approach has led to a situation where commercial interests often take precedence over other considerations, including creator freedom and diverse content.

The Impact on Content Creators

How have YouTube’s policies affected content creators? Many YouTubers have reported experiencing:

- Sudden demonetization of videos without clear explanation

- Difficulty appealing content strikes or demonetization decisions

- Uncertainty about what content is allowed, leading to self-censorship

- Frustration with the platform’s lack of transparency and communication

These issues have led to growing mistrust between creators and the platform, with some popular YouTubers exploring alternative platforms or diversifying their income streams to reduce reliance on YouTube ad revenue.

Accusations of Ideological Bias in Content Moderation

Beyond commercial considerations, YouTube has faced allegations of political bias in its content moderation practices. Some critics, particularly from conservative circles, argue that the platform systematically silences right-leaning perspectives.

Evidence cited by those claiming bias includes:

- Disproportionate demonetization or suspension of conservative commentators

- Ambiguous rules that allow for selective enforcement against disfavored speakers

- Perceived leniency towards left-leaning content that appears to violate similar guidelines

However, YouTube and its defenders argue that the platform applies its rules equally to extremists on both the left and right. They contend that any apparent bias is a result of uneven rule-breaking rather than ideological targeting.

Examining the Evidence of Bias

Is there concrete evidence of systematic bias in YouTube’s moderation practices? While anecdotal examples of seemingly unfair treatment exist, proving systemic bias has proven challenging. Several factors complicate the issue:

- The opacity of YouTube’s decision-making process makes it difficult to analyze patterns in moderation.

- The sheer volume of content on the platform means that inconsistencies in enforcement are inevitable.

- Confirmation bias may lead users to notice and remember instances that align with their preexisting beliefs about bias.

While individual moderators may have personal biases that influence their decisions, evidence of intentional, platform-wide censorship of specific ideologies remains inconclusive.

Balancing Free Speech and Content Moderation

At the heart of the controversy surrounding YouTube’s policies is the challenge of balancing free speech with the need to maintain a safe and advertiser-friendly platform. This balancing act raises several important questions:

- Where should the line be drawn between protecting users and stifling expression?

- How can YouTube maintain its commercial viability without compromising on diverse content?

- What responsibility does a private platform like YouTube have in upholding free speech principles?

These questions have no easy answers, but they are crucial to address as online platforms continue to play an increasingly central role in public discourse.

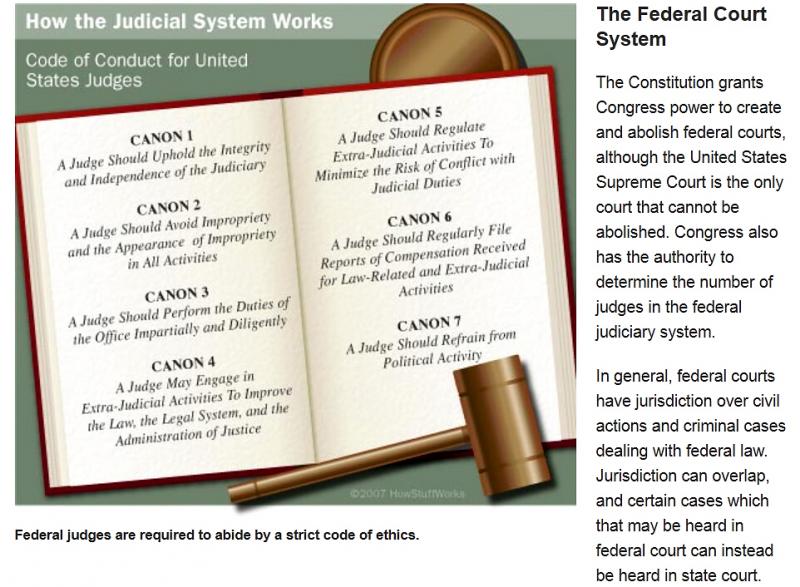

The Role of Section 230

How does Section 230 of the Communications Decency Act impact YouTube’s content moderation practices? This crucial piece of legislation provides online platforms with immunity from liability for user-generated content. It also allows platforms to moderate content without being treated as publishers.

Section 230 has been both praised and criticized:

- Supporters argue it enables free speech online by protecting platforms from endless lawsuits

- Critics contend it gives platforms too much power to censor without accountability

Any changes to Section 230 could have significant implications for YouTube’s approach to content moderation.

Comparing YouTube’s Policies to Other Platforms

To better understand YouTube’s approach to content moderation, it’s helpful to compare it with other major social media platforms. How do YouTube’s policies stack up against those of Facebook, Twitter, and TikTok?

| Platform | Content Policy Strictness | Transparency | Appeals Process |
|---|---|---|---|
| YouTube | High | Medium | Limited |
| Facebook | High | Medium-High | Moderate |
| Twitter | Medium | High | Moderate |
| TikTok | High | Low | Limited |

While all major platforms face similar challenges, YouTube’s policies are generally considered to be among the strictest, particularly when it comes to content monetization.

Lessons from Other Platforms

What can YouTube learn from the content moderation practices of other platforms? Several approaches stand out:

- Twitter’s policy of labeling potentially misleading content rather than removing it outright

- Facebook’s creation of an independent Oversight Board to review contentious moderation decisions

- Reddit’s community-driven moderation model, which allows for more nuanced, context-specific enforcement

By studying and potentially adopting some of these practices, YouTube could improve its own content moderation system.

Proposed Reforms to Improve YouTube’s Policies

Given the widespread criticism of YouTube’s current content moderation approach, what reforms could help address these concerns? Several potential improvements have been suggested by creators, policy experts, and users:

- Increased Transparency: Provide clearer explanations for content removals and demonetizations

- Improved Appeals Process: Implement a more robust and responsive system for appealing moderation decisions

- External Oversight: Create an independent body to review controversial moderation cases

- Clearer Guidelines: Simplify and clarify content rules to reduce ambiguity and inconsistency

- Creator Consultation: Involve content creators more directly in policy development and refinement

- Graduated Enforcement: Implement a more nuanced system of warnings and penalties for policy violations

- AI Refinement: Improve machine learning algorithms to better understand context and nuance

Implementing these reforms could help YouTube strike a better balance between content moderation and creator freedom, potentially addressing many of the current criticisms.

The Challenges of Reform

Why hasn’t YouTube already implemented these seemingly obvious improvements? Several factors complicate the reform process:

- Scale: Any changes must be implementable across billions of videos and users

- Legal Concerns: Reforms must navigate complex legal and regulatory landscapes across multiple jurisdictions

- Commercial Pressures: Changes that risk advertiser relationships could threaten YouTube’s business model

- Technical Limitations: Some proposed reforms may be difficult to implement given current technology

Despite these challenges, the pressure for meaningful reform continues to grow as YouTube’s influence on public discourse expands.

The Future of Content Moderation on YouTube

As technology evolves and societal expectations shift, how might YouTube’s approach to content moderation change in the coming years? Several trends and possibilities emerge:

- Increased use of AI and machine learning for more nuanced content analysis

- Greater collaboration with academic researchers to study the impacts of different moderation approaches

- Potential regulatory changes that could force platforms to adopt new moderation practices

- Exploration of decentralized or blockchain-based content moderation systems

- Development of more sophisticated content rating systems to give users greater control over what they see

These potential developments could reshape YouTube’s content landscape, potentially addressing many of the current criticisms while introducing new challenges and debates.

The Role of User Empowerment

How can YouTube empower users to play a more active role in content moderation? Several approaches could be considered:

- Enhanced content filtering options allowing users to customize their viewing experience

- Community moderation features that leverage the collective wisdom of users

- Improved media literacy resources to help users critically evaluate content

- Greater transparency about recommendation algorithms to give users more control over their feeds

By giving users more tools and information, YouTube could potentially reduce the need for top-down moderation while fostering a more engaged and discerning user base.

The Broader Implications of YouTube’s Content Policies

YouTube’s content moderation practices have implications that extend far beyond the platform itself. As one of the world’s largest sources of online video content, YouTube’s policies can significantly impact:

- Public discourse and the spread of information

- Political and social movements

- Cultural trends and norms

- Educational resources and access to knowledge

- Economic opportunities for content creators

Given these far-reaching effects, the debate over YouTube’s content policies is likely to remain a crucial issue in discussions about online governance and digital rights.

The Global Impact of YouTube’s Policies

How do YouTube’s content moderation practices affect users and creators in different parts of the world? The platform’s global reach means that its policies can have varied impacts depending on local contexts:

- In countries with limited press freedom, YouTube may serve as a crucial platform for alternative voices

- Cultural differences can lead to misunderstandings or inconsistencies in content moderation

- Language barriers may result in uneven enforcement of policies across different regions

- Geopolitical tensions can complicate YouTube’s efforts to remain neutral in content disputes

These global considerations add another layer of complexity to YouTube’s content moderation challenges, highlighting the need for nuanced, context-aware policies.

Introduction to YouTube’s controversial code of conduct policies

YouTube’s content moderation policies have long been a source of controversy and debate. With over 2 billion monthly users, YouTube wields immense power to shape online discourse. Yet many feel the platform’s rules unfairly restrict freedom of expression. This article examines the key criticisms surrounding YouTube’s code of conduct, analyzing where policies may have gone too far.

As an avid YouTube viewer myself, I’ve noticed many confusing inconsistencies in the site’s enforcement. Seemingly benign videos get demonetized or removed, while edgy pranks and offensive content slip through the cracks. Trying to understand YouTube’s byzantine moderation is like navigating a maze blindfolded. Policies are vague, strikes are handed out secretively, and appeals are rarely successful. For creators, this opacity breeds deep frustration and mistrust.

In my view, YouTube’s strict policies likely stem from advertiser pressure. Brands don’t want their ads running before controversial videos, so YouTube plays it safe. But in trying to sanitize the platform, YouTube risks losing the vibrant creativity that made it beloved. It’s a tough balancing act between protecting revenue and protecting free speech.

A brief history of moderator controversies

YouTube’s code of conduct has always leaned towards censorship over openness. When the platform debuted in 2005, no rules were in place. But as the site grew, YouTube faced growing calls to combat disturbing or illegal material.

By 2012, policies were formalized in the first Community Guidelines. They banned hate speech, harassment, dangerous content and more. Further revisions added restrictions on nudity, spam, threats and cyberbullying. On paper, these rules seem quite reasonable.

In practice, however, enforcement has been haphazard. YouTube relies on machine learning and human reviewers to identify rule-breaking uploads. But reviewers receive little training and have to judge complex speech issues in mere seconds. Predictably, many videos get flagged or demonetized incorrectly.

High-profile YouTubers began speaking out, arguing the platform was needlessly censorious and politically biased. But YouTube typically doubles down on disputed moderation decisions. Only sustained public pressure, lawsuits, or media coverage force the company to walk back unpopular bans.

How advertisers influence YouTube’s policies

So why is YouTube so quick to censor? In two words: ad revenue. YouTube depends on selling ads placed before and during videos. Advertisers understandably don’t want their brands associated with offensive or disturbing content.

To keep advertisers happy, YouTube employs keyword filtering that automatically demonetizes videos mentioning sensitive topics like racism or violence. Many educational or artful videos get caught in this coarse content filter.
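To see why a coarse keyword filter sweeps up educational videos, consider a minimal Python sketch. It is an illustration only, not YouTube’s actual system: the keyword list, the `is_demonetized` function, and the sample titles are all hypothetical.

```python
import re

# Hypothetical list of "sensitive" terms; not YouTube's real list.
SENSITIVE_KEYWORDS = {"racism", "violence"}


def is_demonetized(title: str) -> bool:
    """Return True if the title mentions any sensitive keyword.

    The check looks only at individual words, so it cannot tell a
    history lesson about racism apart from content that promotes it.
    """
    words = set(re.findall(r"[a-z]+", title.lower()))
    return not words.isdisjoint(SENSITIVE_KEYWORDS)


titles = [
    "A history of racism in American housing policy",  # educational
    "How to bake sourdough bread",                      # unrelated
]
for title in titles:
    status = "demonetized" if is_demonetized(title) else "monetized"
    print(f"{title} -> {status}")
```

Because the filter matches words rather than intent, the educational title is treated exactly like content an advertiser would genuinely want to avoid.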

YouTube also grants advertisers extensive controls over where their ads run. Brands can exclude certain channels or videos from carrying their ads. This motivates YouTube to be even more conservative in its moderation, to avoid running afoul of skittish advertisers.

In essence, policies are driven less by genuine principles than by commercial incentives. YouTube operates on thin margins, so preserving ad revenue trumps most other considerations.

YouTube accused of ideological bias

Beyond commercial factors, some allege YouTube also skews moderation to disadvantage certain political viewpoints. Conservatives in particular feel the platform systematically silences right-leaning perspectives.

Detractors point to many prominent conservative commentators receiving ad demonetization, scrutiny, or suspension – often over relatively tame opinions. The ambiguity of YouTube’s rules allows for selective weaponization against disfavored speakers.

Defenders counter that the platform equally enforces neutral principles against extremists on the left and right. Rule-breaking rhetoric gets punished regardless of ideology, not because of it, they argue.

The truth likely lies somewhere in the middle. Given human bias, some moderators probably act on political preferences consciously or not. But systemic singling out of conservatives remains unproven. The evidence suggests overzealousness, not intentional censorship.

What reforms could improve YouTube’s policies?

YouTube deserves credit for trying to make complex moderation decisions at massive scale. But improvements are clearly needed to make policies fairer and more transparent.

First, YouTube should simplify its dense legalese guidelines into easy-to-understand rules normal users can actually follow. Vagueness invites uneven enforcement.

Second, the appeals process needs reform. Currently, rejected appeals disappear into a bureaucratic black hole. Adding some external oversight could make the system more accountable and impartial.

Finally, YouTube should be more open about how videos get flagged and why. Moderation aided by AI should still have a human in the loop before enacting demonetization or suspension.
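One way to picture a human in the loop is a routing rule in which automation can only request review and never applies a penalty itself. The Python sketch below is a hypothetical illustration under that assumption; the `Flag` type, the `REVIEW_THRESHOLD` value, and the outcome labels are invented for the example and do not describe YouTube’s real pipeline.

```python
from dataclasses import dataclass


@dataclass
class Flag:
    video_id: str
    classifier_score: float  # hypothetical model confidence, 0.0 to 1.0


# Hypothetical cutoff: flags scoring below it are dropped outright.
REVIEW_THRESHOLD = 0.5


def route_flag(flag: Flag) -> str:
    """Decide what happens to an automated flag.

    The automated step never demonetizes or suspends on its own:
    the strongest outcome it can produce is a request for human review.
    """
    if flag.classifier_score < REVIEW_THRESHOLD:
        return "no_action"
    return "queue_for_human_review"


print(route_flag(Flag("video-a", 0.20)))
print(route_flag(Flag("video-b", 0.99)))
```

Even a flag the model is nearly certain about ends up in front of a person, which trades speed for fewer wrongful penalties.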

With a few commonsense tweaks, YouTube’s code of conduct could become far less mystifying and draconian. The platform may never satisfy all critics, but these improvements are low-hanging fruit.

In closing, content moderation at YouTube’s size is an intractable balancing act. No solution perfectly reconciles free speech and brand safety. But by collaborating with creators, YouTube can craft policies that feel less arbitrary, while still keeping the peace with advertisers.

History of YouTube’s moderation and demonetization controversies

Since its launch, YouTube has struggled to find the right balance between open expression and responsible moderation. The platform’s rocky enforcement history reveals the difficult tradeoffs involved in regulating such a vast user-generated site.

In the early years, YouTube took a largely hands-off approach, relying on community flagging to identify problematic uploads. But as the site exploded in popularity, pressure mounted to tackle objectionable material proactively. Advertisers, regulators, and media critics all urged stronger top-down moderation.

YouTube responded in 2012 by rolling out its first formal content policies. These guidelines banned nudity, graphic violence, hate speech, harassment, and other egregious content. On paper, the rules seemed sensible enough.

In practice, however, enforcement proved inconsistent and controversial. YouTube relied on a blend of automated filters and outsourced human reviewers to identify rule-breaking uploads. But with over 400 hours of new content uploaded every minute, mistakes were inevitable.

Many educational, artistic, or journalistic videos were erroneously flagged as inappropriate by imperfect algorithms. Frustrated creators complained the takedowns were heavy-handed and allowed for little recourse. YouTube’s opacity about review processes didn’t help either.

Alongside rules against offensive material, YouTube also began demonetizing videos deemed “not advertiser-friendly”. Topics like politics, sexuality, and social issues were seen as too sensitive for many brands. Again, creators criticized the broad demonetization dragnet for being overzealous.

By 2016, discontent was reaching a boiling point. High-profile YouTubers with millions of subscribers spoke out publicly against the platform’s haphazard and opaque moderation. Hashtags like #YouTubeIsOverParty spread across social media.

Bowing to the backlash, YouTube apologized and promised creators a more open line of communication moving forward. But controversies continued to flare up periodically, suggesting deeper issues with the platform’s moderation system.

Today, striking the right balance remains challenging. But many believe YouTube could do more to bring transparency, accountability, and consistency to its policies and enforcement.

With thoughtful reforms, perhaps YouTube could uphold community standards while avoiding the over-censorship that has too often silenced legitimate voices on the platform.

Criticisms of YouTube for being overly strict and biased

One of the most common complaints against YouTube is that its moderation policies are far too draconian, restricting speech that should be permitted in the name of open discourse and creativity.

Many argue that under the guise of protecting users, YouTube’s rules end up silencing legitimate opinions, especially those at the fringes of acceptability. The haziness of guidelines leads to the removal of videos that actually foster healthy debate, even if controversial.

For instance, YouTube has been criticized for overly aggressive stifling of political commentary from all sides. Channels discussing sensitive current events often get demonetized or banned, even if making thoughtful arguments within fair use rules.

Commentators allege that even measured critique of certain social issues is barred, because YouTube deems the topics inherently “unsafe” for monetization. But blocking earnest discussion does more harm than good, they contend.

Another common complaint is inconsistent and biased enforcement of policies. YouTube has been accused of targeting certain creators for removal more than others, even for similar content that seemingly violates the same community standards.

Critics argue that YouTube moderators sometimes act on unconscious bias, or bend to external pressure, rather than adhering to rules objectively. This leads to animosity when similar cases receive different treatment.

Specifically, many feel YouTube harbors a double standard against videos deemed right-wing or conspiratorial. Some see a pattern of stricter policing of controversial conservative channels, beyond normal policy application.

However, defenders counter that enforcement appears uneven because bans rightly focus on extreme rule-breaking rhetoric, which tends to come more from one side of the political spectrum.

In the end, dispelling suspicions of overreach or bias requires YouTube to bring more transparency to the moderation process. Clearer policy logic and accountability could ease concerns that certain voices are unfairly silenced or singled out on the platform.

Analyzing YouTube’s motivations – balancing free speech vs brand safety

When examining the strictness of YouTube’s policies, it helps to analyze the company’s underlying motivations. YouTube finds itself perennially pulled between two competing interests – defending free speech versus attracting advertisers.

On one hand, YouTube wants to provide an open platform for all voices to be heard. Imposing too many speech restrictions would undermine the site’s identity as a hub of diverse creativity.

But on the other hand, YouTube depends heavily on revenue from ads placed alongside videos. Advertisers understandably don’t want their brands promoted next to controversial, offensive, or disturbing content.

This tension forces YouTube to walk a tightrope. The company must satisfy both creators who want maximal creative freedom, and advertisers who want minimal brand risks. Finding the right balance is a complex, never-ending challenge.

Critics argue YouTube goes too far trying to sanitize the platform and cater to squeamish advertisers. Even mildly provocative content faces demonetization or removal in the name of “brand safety.”

But YouTube responds that most advertisers refuse to distinguish between reasonable and truly objectionable content. Stricter policies are necessary to keep the ad dollars flowing in.

Some argue YouTube should do more to pressure brands to steer ads away only from truly harmful videos, while permitting monetization elsewhere. But advertisers hold significant leverage in the relationship.

YouTube must also consider its own public reputation. If scandals erupt around offensive videos, the company feels pressure to tighten rules – even at the cost of open expression.

At the end of the day, YouTube operates a business, not a public forum. As much as it may value creator satisfaction, the company’s financial incentives require prioritizing brand safety over maximal free speech.

Whether this tradeoff is ethical or not remains worthy of debate. But the tensions help explain, if not excuse, why YouTube’s policies often veer towards over-censorship.

Most controversial YouTube bans and demonetizations over the years

Since tightening its policies, YouTube has been embroiled in many high-profile controversies regarding video takedowns and demonetization. These incidents shine a spotlight on the complexities and inconsistencies of YouTube’s moderation.

One early flap happened in 2012 when YouTube banned videos promoting certain gun accessories. Critics saw it as political censorship of legal firearm equipment. But YouTube argued the videos violated rules against promoting sales of dangerous products.

In 2015, YouTube creators became irate when monetization was stripped from any videos containing LGBTQ+ content. YouTube eventually admitted its filters had been overzealous and agreed to modify its approach.

But LGBTQ+ issues kept generating controversy. In 2019, journalist Carlos Maza publicized repeated homophobic and racist remarks aimed at him in videos by commentator Steven Crowder. Critics argued the clips violated YouTube’s policies against hate speech and harassment, yet YouTube initially declined to remove them and acted against Crowder’s channel only after a major public backlash.

Conservative channels in general complain of frequent demonetization despite not clearly violating rules. Some see political bias at play, but YouTube claims policies are enforced neutrally.

Beyond politics, YouTubers have been angered by automated bans on videos about suicide awareness, pornography addiction recovery, and cannabis use – even in legal contexts. Too often legitimate content gets swept up by imprecise filters.

Then in 2020, a group of doctors had their videos discussing potential Covid treatments removed from YouTube. The site said the claims lacked scientific consensus, sparking censorship allegations.

Most recently, YouTube announced plans to remove “harmful misinformation” about vaccines – another well-intentioned but vague policy that could silence reasonable opinions.

While policies aim to make YouTube safer, inconsistent and overzealous enforcement continues generating outrage from those who feel unjustly silenced. Better human oversight of bans could help prevent future backlash.

High profile creators speak out against YouTube’s policies

As frustration with YouTube’s moderation mounted, many of the platform’s biggest stars began publicly criticizing its code of conduct as unfair and overly restrictive.

One notable example came in 2016 when mega-YouTuber PewDiePie, who commands over 100 million subscribers, made several videos slamming YouTube’s ramped-up demonetization efforts.

PewDiePie accused YouTube of being overzealous and sweeping up educational/commentary videos along with truly inappropriate content. He warned YouTube was alienating creators crucial to the platform’s popularity.

In 2017, comedian JonTron released a video titled “YouTube has a free speech problem” in response to policies censoring controversial political opinions. He argued YouTube suppressed certain viewpoints under the guise of neutrality.

After facing her own demonetization issues, media critic Anita Sarkeesian said YouTube was “kneecapping creators” with misguided policies aimed at pleasing advertisers rather than serving users.

LGBTQ advocate Tyler Oakley also called out YouTube for over-filtering, saying bans on LGBTQ content wrongly equated queer users with pornography and risked increasing stigma.

Joe Rogan, h3h3, Boogie2988 and dozens more popular YouTubers have aired similar grievances. They argue that even if policies have good intentions, sloppy enforcement has spiraled out of control.

This outcry holds power since top creators drive so much of YouTube’s traffic and revenue. By spotlighting flaws, they hope to pressure YouTube into rethinking its heavy-handed approach.

YouTube increasingly has to choose between the wishes of corporations and the needs of the talent that built the platform. Listening more to creators could help reach a better balance.

Arguments that policies unfairly target edgy or political content

One frequent complaint against YouTube is that its restrictive policies disproportionately impact creators making edgy or political content, even when they aren’t directly violating rules.

Channels focused on controversial subjects like sexuality, social justice, politics, philosophy and more say they are unfairly targeted by filters looking to sanitize the platform of potentially “unsafe” topics.

For example, LGBTQ+ vloggers often have their videos suppressed or demonetized seemingly just for discussing queer identities and experiences. Even supportive and educational content gets flagged, stifling open dialogue.

Political commentators similarly report being placed under tighter scrutiny, with monetization revoked for thoughtful discussions of divisive current events and ideologies. YouTube is accused of deeming entire topics inappropriate.

Some allege YouTube censors fringe political perspectives that challenge mainstream narratives, under the guise of protecting users. But suppressing dissenting voices seems more harmful than allowing free debate.

Comedians also frequently protest the removal of edgy joke content deemed offensive, even though humor can have social value. Ambiguous policies fail to distinguish between mean-spirited and subversive satire.

While enhanced moderation aims to make YouTube more family-friendly, critics argue it creates a dull homogenization of viewpoints. Provocative creators feel forced to self-censor or face consequences.

YouTube responds that it applies policies evenly based on behavior, not specific identities or ideologies. But the platform’s uneasy relationship with controversial speech remains a lingering issue.

Better human oversight of bans, and tighter focus on truly dangerous content, could help preserve YouTube as a hub for boundary-pushing expression.

How advertisers and corporate sponsors influence YouTube’s decisions

It’s impossible to fully understand YouTube’s moderation policies without considering the outsized role of advertisers and corporate sponsors in shaping the platform’s decisions.

YouTube relies heavily on ad revenue, with brands paying to promote products through video ads running before and during content. This gives advertisers immense sway over YouTube’s policies.

Fearful of placements next to controversial content, many brands employ keyword blocking to keep ads away from certain topics. YouTube is then pressured to demonetize those same topics to appease sponsors.

Advertisers also whitelist specific channels considered “brand safe.” This motivates YouTube to play favorites with major corporate partners and popular creators who attract the most ad dollars.

In essence, policies now function partly to protect advertising opportunities rather than just uphold ethics. Rules are tightened to enhance monetization at the cost of open expression.

YouTube also partners directly with big brands to fund original programming and celebrity content. These deals come with demands to keep the platform “advertiser-friendly.”

All of this nudges YouTube to prioritize the interests of sponsors over creators or users. Concerns about PR and revenue drive the precautionary impulse towards overpolicing.

Of course, YouTube cannot afford to ignore the wishes of the talent that brings in viewers. But overall, the scales tilt towards satisfying the brands that pay the bills.

For critics, excessive advertiser accommodations risk stripping away everything novel and authentic about YouTube. But the company shows little desire to jeopardize its ad income, whatever the creative cost.

Reforming this commercial dynamic poses a significant challenge in the search for better editorial policies on the platform.

Accusations of ideological bias and inconsistent policy enforcement

YouTube stands accused not only of overreach in its moderation, but also of inconsistently applying policies in ways that disproportionately impact certain ideological groups.

In particular, many conservatives feel YouTube has systematically targeted right-leaning channels for demonetization and suspension while overlooking comparable behaviors from liberal creators.

For example, bombastic conservative commentators are often sanctioned for aggressive language, while equally uncivil liberal pundits go unpunished. To some, this amounts to political censorship.

There is also a sense that YouTube holds entertainers on the right to stricter standards of conduct. Crass liberal comedians are given more leeway for provocative jokes compared to counterparts on the right.

Defenders argue YouTube acts in good faith to enforce neutral guidelines uniformly. If conservatives face more bans, the theory goes, it’s because they are more likely to violate hate speech and harassment rules.

But selectivity in enforcement lends credence to claims of bias. Critics say YouTube allows progressive viral stars to thrive despite bullying and vulgar antics, while those on the right get less benefit of the doubt.

Whether intentional or not, these double standards contribute to the sense that YouTube has an agenda – or at least suffers from political homogeneity among reviewers.

Truly equitable policy requires holding creators to consistent norms regardless of ideology. The issues are complex, but YouTube could take steps to apply rules more even-handedly.

Simpler, clearer guidelines and more transparency around enforcement would help dispel suspicions of unfairness. Accountability is key to regaining trust.

YouTube’s lack of transparency around strikes and appeals process

One of the most common grievances creators voice about YouTube moderation is the platform’s opacity around issuing strikes and handling appeals.

When a video is removed or demonetized, the associated channel receives a vague alert citing a broad policy violation. But creators are given little specific detail on why the content was deemed objectionable.

Without understanding the exact infraction, YouTubers struggle to avoid repeats or argue their case. The strike system in particular is cryptic, with three strikes potentially resulting in account termination.

Appealing disciplinary actions is also notoriously futile on YouTube. Creators report that appeals are almost always denied, disappearing into a bureaucratic void. Some violations seem impossible to refute under the system.

This lack of responsiveness spurs accusations that YouTube employs automated form letter rejections. Even humans reviewing appeals appear unable to override previous decisions under rigid protocols.

In the rare instance a strike gets retracted after public scrutiny, the platform generally frames it as a glitch rather than a mistaken judgment. Transparency remains minimal.

For creators whose livelihoods depend on the platform, being at the mercy of an opaque disciplinary regime triggers deep anxiety. Clearer processes could ease a lot of confusion and mistrust.

Many believe YouTube owes creators timely notifications, specific infractions, opportunities for reform, and good faith dialogue. Greater openness would convey respect, not just control.

YouTube has no easy task balancing competing interests. But improving policy communication represents low-hanging fruit on the road to rebuilding trust.

Effects of over-moderation – creator burnout and loss of authenticity

While tighter control aims to improve YouTube, excessive moderation also risks unintended consequences that could fundamentally alter the platform’s character.

Overzealous policing wears on creators mentally, killing passion and causing burnout. Living in constant fear of the ban hammer saps motivation and creativity.

It also pressures YouTubers to artificially sanitize their content. Edgy jokes, unconventional opinions, and controversial topics get filtered out. Everything becomes standardized and advertiser-friendly.

In time, this could strip YouTube of the authenticity that made it a cultural phenomenon. The site thrived as a safe space for weird, raw, unfiltered expression outside the mainstream.

As moderation expands, creators morph into benign corporate-friendly content machines. Provocative voices get subdued or squeezed out altogether. Conformity replaces risk-taking.

Sure, shock jocks and toxicity decrease. But thoughtful yet edgy commentary also gets suppressed in the crusade for squeaky clean palatability.

Heavy restrictions could even jeopardize YouTube’s value for marginalized groups, who found empowerment through sharing their unconventional experiences.

There are no easy solutions for YouTube. But recognizing over-moderation’s chilling effects may help rein in the impulse towards control before creators flee for more open platforms.

With care, the site can remove truly harmful content while still providing space for unorthodox voices that expand our horizons.

Calls for YouTube to be more clear, reasonable and accountable

Amid growing backlash, creators and critics are pressuring YouTube to reassess its approach to moderation. Many argue the platform must become clearer, more reasonable and more accountable in enforcing policies.

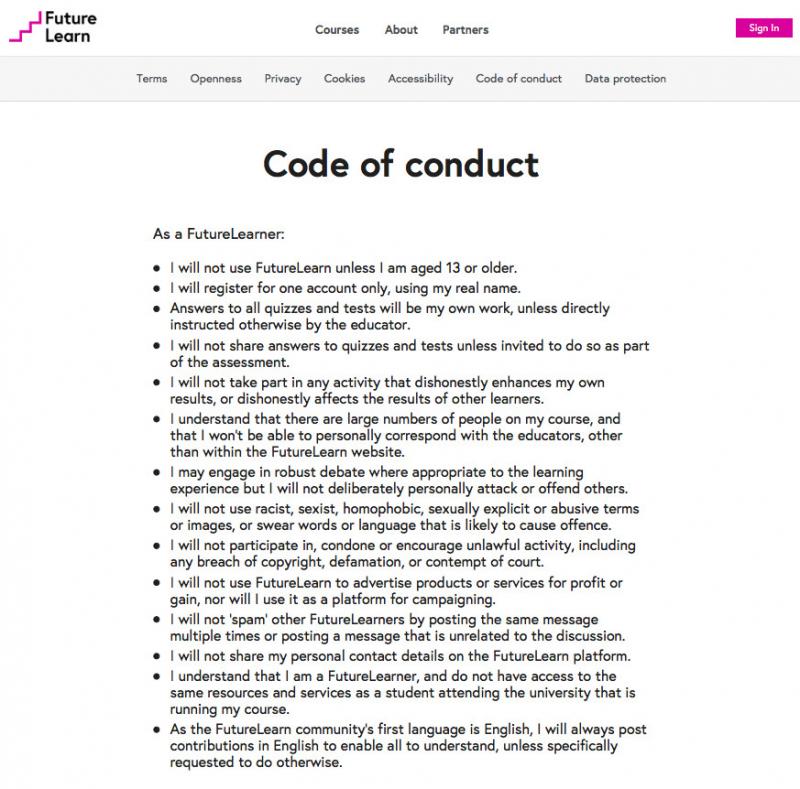

First, reform advocates call for rewriting opaque rules in plain accessible language. Vagueness currently allows inconsistent and even discriminatory application.

Policy logic should be explained fully so creators understand where lines are drawn. Content should only be removed with specific evidence of tangible harm, not just perceived offense.

Accountability also requires YouTube to detail exactly why particular videos get flagged. Vague, blanket references to “community standards” help no one.

Similarly, the penalty system needs more gradation between first offenses and channel deletion. Scaled consequences would prevent knee-jerk nuclear options.

Many also demand an improved appeals process where human reviewers genuinely consider context when evaluating disputes. Currently, appeals seem reflexively rubber-stamped rather than thoughtfully assessed.

On demonetization, critics advocate policies focused narrowly on truly dangerous or reprehensible videos, rather than broad categories of edgy yet harmless content.

In general, YouTube must shift from opaque top-down control to collaborating with creators to develop fairer regulations. Communication and flexibility would ease tensions.

No moderation system will satisfy everyone. But increasing clarity, consistency and transparency would demonstrate that YouTube is listening to its community. Recognizing that heavy-handedness has costs would be a sign of wisdom.

Comparisons to competitors like Twitch with more creator-friendly systems

As anger builds, YouTube is also now being compared unfavorably to rival platforms perceived as offering more creator-friendly content moderation.

For example, many cite streamer hub Twitch as doing a better job balancing openness with enforcement. Twitch certainly has issues, but is seen as more transparent and reasonable.

Twitch policies seem focused narrowly on dangerous behaviors rather than preemptive censorship. Streamers receive detailed explanations for removals and get opportunities to reform before permanent bans.

Twitch moderators also appear to exercise more human judgment when weighing grey areas in context. YouTube’s rules allow less room for discretion in assessing borderline content.

In addition, Twitch grants streamers an open line to discuss enforcement actions directly with staff. YouTube’s process remains opaque in comparison.

Overall, Twitch has cultivated an ethos understanding that contentious opinions often need airing rather than suppression. YouTube by contrast takes a more paternalistic approach.

This highlights that better moderation practices are possible at scale. Critics cite Twitch when accusing YouTube of hiding behind neutrality to conceal unaccountable overreach.

Of course, comparisons are imperfect given the platforms’ different challenges. But YouTube should acknowledge valid critiques by implementing reforms towards greater transparency, consistency and collaboration.

If change seems unlikely, frustrated creators could continue migrating to alternatives perceived as less controlling.

How YouTube could reform policies to protect free speech better

While YouTube faces no easy decisions, critics argue there are reforms that could make moderation fairer and provide more protection for free expression.

First, community guidelines should be rewritten for clarity, eliminating confusing legalese and laying out a precise roadmap of unacceptable behaviors.

Moderators also need enhanced training to properly weigh context when reviewing borderline content. Knee-jerk deletions must be replaced by thoughtful human assessments.

The appeals process similarly requires an overhaul. Creators deserve robust reconsideration from unbiased evaluators, not copy-paste denials. Some external oversight could help.

Notification systems must improve as well. Vague references to “policy breaches” should be replaced by thorough explanations tied to specific violations.

Penalties could also be modernized to include intermediate steps like temporary video removals or partial monetization limits. Jumping straight to channel deletion helps no one.

Additionally, criteria for demonetization should be narrowed to unambiguously dangerous material, not just subjects arbitrarily deemed “sensitive.”

More Creator Liaisons at YouTube would also ease tensions by providing a valuable human point of contact when disputes arise.

Finally, incorporating input from diverse creators when drafting policies could help balance perspectives beyond just corporate caution.

With care and wisdom, YouTube can remove truly harmful content while welcoming voices outside the mainstream. It just requires embracing nuance over control.

Conclusion – weighing the pros and cons of YouTube’s strict policies

In closing, YouTube’s code of conduct raises difficult tradeoffs between safety and openness. While the platform must balance competing interests, critics make compelling points that current policies lean too far towards control.

More restrictive moderation does help advertisers feel secure and limits truly dangerous content. But too much preemptive censorship risks suppressing voices outside the mainstream, chilling creativity.

YouTube deserves some deference as a private company, but its ubiquity also makes it an important public forum. Excessive control risks homogeneity and dull conformity.

With care, YouTube can still permit provocative ideas while prohibiting truly reprehensible behaviors like violence and hate. But the site’s scaled approach currently lacks nuance.

Flaws in opaque processes and inconsistent enforcement also feed suspicions that YouTube favors some political viewpoints over others when applying rules.

In fairness, no solution perfectly reconciles the tensions. But reforms promoting transparency, accountability and collaboration would help ease understandable frustrations.

YouTube grew dominant by empowering individual creators, not controlling them. Wise reform should play to the platform’s strengths by erring towards freedom wherever possible.

If change seems unlikely internally, competition from more creator-centric platforms could force YouTube to rethink its more authoritarian instincts. The site’s future depends on getting this balance right.